In this tutorial, I’d like to demonstrate how we can use Puppeteer to render content generated by client-side scripts on the server.

The Problem with Client-Side SPAs

The problem almost every SPA faces is that its content is generated on the client side. This means the data (such as a list of names in a table) gets onto the page the following way:

1. The page loads and the client-side JavaScript is executed

2. The script fetches the data from elsewhere

3. The data is inserted into a container div
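The three steps above can be sketched in plain JavaScript. This is a minimal illustration rather than code from this tutorial: fetchComments and loadPage are hypothetical names, fetchComments stands in for a real fetch() call, and a plain object stands in for the container div so the sketch runs in Node without a browser. It shows that the container stays empty until the script has finished running.

```javascript
// Step 2 stand-in: a hypothetical data source that resolves asynchronously,
// like fetch() would. In a real page this is a network request.
function fetchComments() {
  return Promise.resolve([
    { email: 'a@example.com', body: 'First!' },
    { email: 'b@example.com', body: 'Nice post' },
  ]);
}

// Steps 2 and 3: fetch the data, then insert it into the container.
async function loadPage(container) {
  const comments = await fetchComments();
  container.innerHTML = comments
    .map(c => `<li><b>${c.email}</b>: ${c.body}</li>`)
    .join('');
}

// Step 1: the page arrives with an empty container. This is all that
// "view page source" (or a crawler that skips scripts) ever sees.
const container = { innerHTML: '' };
const before = container.innerHTML;

loadPage(container).then(() => {
  console.log('before script:', JSON.stringify(before)); // before script: ""
  console.log('after script: ', container.innerHTML);
});
```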

If you view the page source of a typical single-page app, you will not see any of that content inside the div, because it was loaded from elsewhere during client-side execution. This is bad for SEO, mainly because crawlers cannot see what’s on your page unless they execute the scripts themselves. Even then, at the time of writing, the rendering engine Google used for its crawler was many versions behind the latest release of Chrome, so there is no guarantee that the crawler sees your page the way you intended.

What is Puppeteer?

Puppeteer is a Node library created by the Chrome DevTools team that lets you control Chrome headlessly. This is extremely powerful, because you can now control Chrome programmatically and perform tasks such as crawling, taking screenshots, running end-to-end tests and other automation, all from a single tool.

Let’s get started. For this tutorial, we’re going to create a very simple Express app with express-generator:

    npm i -g express-generator

This will place a global express command in your terminal. Next, we can create a simple starter project with:

    express ssrspaPuppeteer

This will generate a boilerplate Express project. We can then finish the project setup with:

    cd ssrspaPuppeteer; npm i; npm start

The server should now be running on localhost:3000.

Next, we need to add a static HTML file to the public folder; let’s call it comments.html.

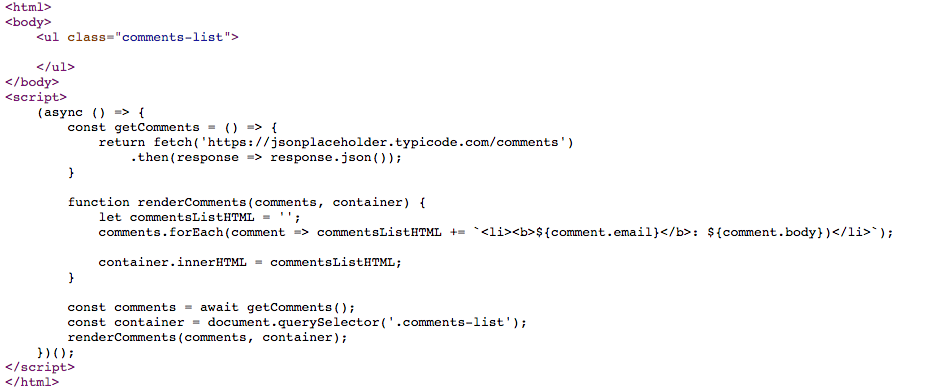

    <html>
      <body>
        <ul class="comments-list"></ul>

        <script>
          (async () => {
            // Fetch the dummy comments from JSONPlaceholder
            const getComments = () => {
              return fetch('https://jsonplaceholder.typicode.com/comments')
                .then(response => response.json());
            };

            // Build one <li> per comment and insert them all into the container
            function renderComments(comments, container) {
              let commentsListHTML = '';
              comments.forEach(comment => {
                commentsListHTML += `<li><b>${comment.email}</b>: ${comment.body}</li>`;
              });
              container.innerHTML = commentsListHTML;
            }

            const comments = await getComments();
            const container = document.querySelector('.comments-list');
            renderComments(comments, container);
          })();
        </script>
      </body>
    </html>

This client-side script does two things: first, it fetches a list of comments from the dummy API at jsonplaceholder; next, it joins the comments into a single HTML string and inserts that string into .comments-list. If async/await or ES6 is unfamiliar to you, I have some tutorials on the site about them.
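One caveat with renderComments: it inserts comment.email and comment.body into innerHTML as-is, so any markup inside a comment would be interpreted by the browser. The dummy API’s data is harmless, but with user-generated content you would want to escape it first. Here is a minimal sketch; escapeHtml and buildCommentsHTML are hypothetical helper names, not part of the original page.

```javascript
// Replace the characters that have special meaning in HTML with entities.
// & must come first: replacing it last would turn the &lt; produced below into &amp;lt;.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&#39;');
}

// Same output shape as renderComments, but with every field escaped.
function buildCommentsHTML(comments) {
  return comments
    .map(c => `<li><b>${escapeHtml(c.email)}</b>: ${escapeHtml(c.body)}</li>`)
    .join('');
}

// In comments.html you would then write:
//   container.innerHTML = buildCommentsHTML(comments);
console.log(buildCommentsHTML([{ email: 'x@example.com', body: '<img src=x onerror=alert(1)>' }]));
// <li><b>x@example.com</b>: &lt;img src=x onerror=alert(1)&gt;</li>
```

Alternatively, in the browser you can create each element with document.createElement and assign the text through textContent, which never interprets markup.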

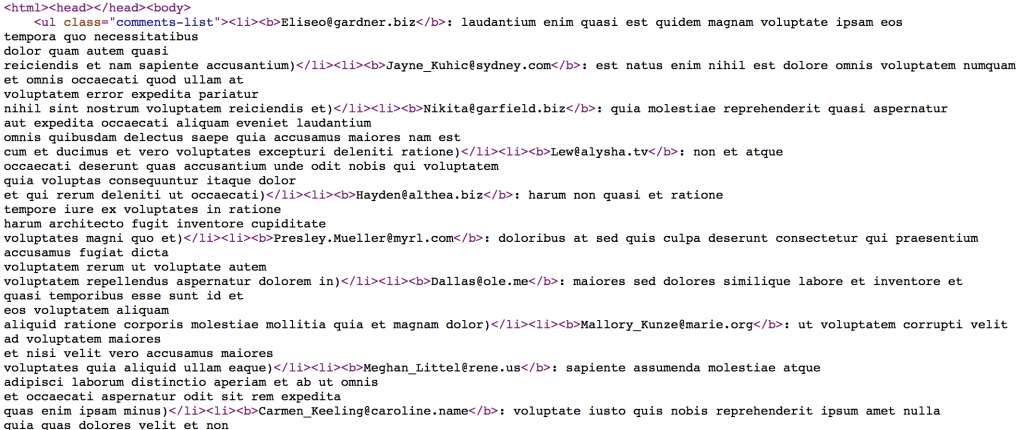

If we go to http://localhost:3000/comments.html, we should see a simple page with 500 comments on it. To see the problem with a client-side rendered page, view the page source: you’ll notice that the content within .comments-list is empty. Don’t worry, we’re going to fix that with Puppeteer.

Puppeteer and Express

Install the Puppeteer library with

    npm i puppeteer

Then create a helper file called ssr.js in the root of your Express project:

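    const puppeteer = require('puppeteer');

    async function ssr(url) {
      console.info('rendering the page in ssr mode');
      const browser = await puppeteer.launch();
      try {
        const page = await browser.newPage();
        try {
          // Wait until the network has been idle for 500 ms,
          // then make sure at least one comment has been rendered
          await page.goto(url, {waitUntil: 'networkidle0'});
          await page.waitForSelector('.comments-list li');
        } catch (err) {
          console.error(err);
          throw new Error('page.goto/waitForSelector timed out.');
        }
        // Serialize the fully rendered DOM back into an HTML string
        const html = await page.content();
        return {html};
      } finally {
        // Always close the browser, even if rendering failed
        await browser.close();
      }
    }

    module.exports = ssr;

This script launches headless Chrome through Puppeteer, visits the specified URL, waits until the network is idle and at least one <li> has been rendered inside .comments-list, and returns the HTML of the fully rendered page. (Waiting for .comments-list alone would succeed immediately, because the empty <ul> is already part of the static HTML.) The finally block makes sure the browser is closed even when rendering fails.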
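Launching a new Chrome instance for every request is the most expensive part of ssr.js. A common refinement is to launch the browser once and open a fresh page per request. The sketch below assumes that approach; createRenderer and renderer.js are hypothetical names, and the launch function is passed in (use puppeteer.launch in the app) so the logic can be exercised without Chrome. browser.newPage, page.goto, page.waitForSelector, page.content, page.close and browser.close are standard Puppeteer calls.

```javascript
// Hypothetical renderer module (e.g. renderer.js) that shares one browser
// across requests. `launch` should behave like puppeteer.launch.
function createRenderer(launch) {
  let browserPromise = null;

  function getBrowser() {
    if (!browserPromise) {
      // Launch lazily on first use; forget a failed launch so the next call retries.
      browserPromise = launch().catch(err => {
        browserPromise = null;
        throw err;
      });
    }
    return browserPromise;
  }

  async function render(url) {
    const browser = await getBrowser();
    const page = await browser.newPage(); // one fresh tab per request
    try {
      await page.goto(url, { waitUntil: 'networkidle0' });
      await page.waitForSelector('.comments-list li');
      return { html: await page.content() };
    } finally {
      // Close only the tab; the browser stays up for the next request.
      await page.close();
    }
  }

  // Call on shutdown to release the shared browser.
  async function close() {
    const pending = browserPromise;
    browserPromise = null;
    if (pending) await (await pending).close();
  }

  return { render, close };
}

module.exports = createRenderer;

// Usage in app.js (assuming the file above is saved as renderer.js):
//   const puppeteer = require('puppeteer');
//   const { render } = require('./renderer.js')(() => puppeteer.launch());
//   const { html } = await render(url);
```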

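The last thing we need to do is to bring this helper into Express. Open app.js and include ssr.js:

    var ssr = require('./ssr.js');

Then create a handler for the route /comments. Add it above the catch-404 handler that express-generator places near the bottom of app.js; routes registered after that handler are never reached.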

    app.get('/comments', async (req, res, next) => {
      try {
        // Render the static page in headless Chrome and send back the result
        const { html } = await ssr(`${req.protocol}://${req.get('host')}/comments.html`);
        return res.status(200).send(html);
      } catch (err) {
        // Express 4 does not catch rejected promises, so forward the error
        return next(err);
      }
    });

This essentially proxies /comments to /comments.html: Puppeteer loads comments.html, runs its client-side JavaScript, and the resulting HTML is sent to the user.

Head over to http://localhost:3000/comments and you should see the same page you saw before. The difference is that when you view the page source, you’ll notice that each <li> within .comments-list is now present.
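You can also check the difference from the command line instead of using view-source. The sketch below assumes Node 18 or later (for the built-in global fetch) and the server from this tutorial running on localhost:3000; compare.js, countComments and compareRoutes are hypothetical names. It fetches the raw HTML of each URL without running any JavaScript, so it roughly approximates what a crawler that skips scripts receives.

```javascript
// Count the <li> elements inside <ul class="comments-list"> in a raw HTML string.
// A rough regex check tailored to this tutorial's markup, not a real HTML parser.
function countComments(html) {
  const list = html.match(/<ul class="comments-list">([\s\S]*?)<\/ul>/);
  if (!list) return 0;
  const items = list[1].match(/<li[\s>]/gi);
  return items ? items.length : 0;
}

// Fetch the raw HTML of both URLs (no JavaScript runs here) and print the counts.
async function compareRoutes(baseUrl) {
  for (const path of ['/comments.html', '/comments']) {
    const res = await fetch(baseUrl + path);
    console.log(`${path}: ${countComments(await res.text())} comments in the HTML`);
  }
}

// Run as: node compare.js http://localhost:3000
const baseUrlArg = process.argv[2];
if (baseUrlArg) {
  compareRoutes(baseUrlArg).catch(console.error);
}
```

With the server running, /comments.html should report zero comments, while /comments should report the full list.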

Of course, there are a few optimizations you can make here, such as caching the result so you don’t have to run the page through Puppeteer on every page load. I challenge you to implement that yourself. I highly recommend the Puppeteer documentation on Google’s developer site. I hope this tutorial was useful and helps you speed up your websites.
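If you want to compare notes after trying the caching challenge yourself, here is one minimal in-memory sketch. It assumes a single server process and that a rendered page may be up to ttlMs milliseconds stale; withCache is a hypothetical helper, and it caches the promise rather than the HTML so that concurrent requests for the same URL share one Puppeteer render.

```javascript
// Wrap an async render(url) function with a per-URL, time-limited cache.
// `now` is injectable so expiry can be tested; it defaults to Date.now.
function withCache(render, ttlMs, now = Date.now) {
  const cache = new Map(); // url -> { expires, promise }

  return function cachedRender(url) {
    const hit = cache.get(url);
    if (hit && hit.expires > now()) return hit.promise;

    const entry = { expires: now() + ttlMs, promise: null };
    entry.promise = render(url).catch(err => {
      // Do not keep failed renders around; the next request retries.
      if (cache.get(url) === entry) cache.delete(url);
      throw err;
    });
    cache.set(url, entry);
    return entry.promise;
  };
}

// Usage in app.js (assuming ssr.js from this tutorial):
//   const cachedSsr = withCache(ssr, 60 * 1000);
//   const { html } = await cachedSsr(url);
```

The map grows by one entry per distinct URL, which is fine for a single /comments route; for many routes you would want a size limit as well.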

This tutorial was inspired by Eric Bidelman; you can read his original post on the official Google blog.


If you enjoyed this tutorial, make sure to subscribe to our YouTube channel and follow us on Twitter @pentacodevids for the latest updates!